Cognition is “the mental action or human intelligence processes of acquiring knowledge and understanding through thought, experience, and the senses”. Artificial Intelligence, therefore, can be defined as the simulation and automation of cognition using computers. Specific applications of AI include expert systems, speech recognition, and machine vision.

Today, self-learning systems, otherwise known as artificial intelligence or ‘AI’, are changing the way architecture is practiced, just as they are changing our daily lives, whether or not we realize it. If you are reading this on a laptop, tablet, or mobile phone, then you are directly engaging with a number of integrated AI systems, now so embedded in the way we use technology that they often go unnoticed.

As an industry, AI is growing rapidly and is understood to be on track to be worth $70bn globally by 2020. This is due in part to steady improvement in microprocessor speed, which in turn increases the volume of data that can be gathered and stored.

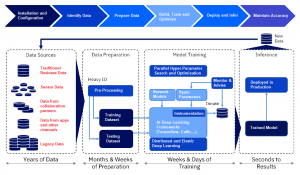

The diagram above depicts a typical AI system workflow. AI frameworks provide the building blocks for data scientists and developers to design, train, and validate AI models through a high-level programming interface, without getting into the nitty-gritty of the underlying algorithms. The reliability of AI is therefore critically bound to two aspects: data (sources and preparation) and inference (training and algorithms).
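As a rough illustration of the design, train, and validate stages, here is a minimal sketch in plain Python. It does not use any real AI framework; the function names (`prepare`, `train`, `validate`), the toy data, and the one-feature threshold "model" are all assumptions made for this example.

```python
import random
import statistics

def prepare(raw):
    """Data stage: drop incomplete records and scale the feature to [0, 1]."""
    clean = [(x, y) for x, y in raw if x is not None]
    lo = min(x for x, _ in clean)
    hi = max(x for x, _ in clean)
    return [((x - lo) / (hi - lo), y) for x, y in clean]

def train(samples):
    """Training stage: place a threshold midway between the two class means."""
    mean0 = statistics.mean(x for x, y in samples if y == 0)
    mean1 = statistics.mean(x for x, y in samples if y == 1)
    return (mean0 + mean1) / 2

def validate(threshold, samples):
    """Validation stage: fraction of held-out samples classified correctly."""
    correct = sum((x > threshold) == bool(y) for x, y in samples)
    return correct / len(samples)

# Hypothetical raw data: (feature, label) pairs, one with a missing feature.
raw = [(1.0, 0), (2.0, 0), (None, 0), (3.0, 0), (2.5, 0),
       (7.0, 1), (8.0, 1), (9.0, 1), (7.5, 1)]

data = prepare(raw)
random.Random(0).shuffle(data)             # fixed seed keeps the split repeatable
train_set, held_out = data[:6], data[6:]   # simple hold-out split

threshold = train(train_set)
accuracy = validate(threshold, held_out)
print(f"threshold={threshold:.3f} accuracy={accuracy:.2f}")
```

Even in this toy version, the result depends on both aspects: a bad record that slips through `prepare` or a poor choice in `train` changes the accuracy that `validate` reports.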

AI brings impressive applications, with remarkable benefits for all of us, but notable questions with social, political, or ethical facets remain unanswered.

Even though the hype around AI is sky high, has the technology proven to be useful for enterprises?

As AI enables companies to move from an experimental phase to new business models, a new study indicates that errors can be reduced through careful regulation of human organizations, systems, and enterprises.

This recent study by Thomas G. Dietterich of Oregon State University reviews the properties of high-reliability organizations and how enterprises can modify or regulate the scale of AI. The researcher says, “The more powerful technology becomes, the more it magnifies design errors and human failures.”

The responsibility for minimizing risks lies with the tech behemoths that are piloting AI applications, the new High-Reliability Organizations (HROs). Most of the bias and errors in AI systems are built in by humans, and as companies across the globe build AI applications in various fields, the potential for human error will also increase.

High-End Technology and Its Consequences

As AI technologies automate existing applications and create opportunities and breakthroughs that never existed before, they also come with their own set of inevitable risks. The study cites Charles Perrow’s book Normal Accidents, written after a massive nuclear accident, which delved into organizations that worked with advanced technologies such as nuclear power plants, aircraft carriers, and the electrical power grid. The study summarizes five features of High-Reliability Organizations (HROs):

Preoccupation with failure: HROs know and understand that there are failure modes they have not yet observed.

Reluctance to simplify interpretations: HROs build an ensemble of expertise and people so multiple interpretations can be generated for any event.

Sensitivity to operations: HROs maintain staff who have deep situational awareness.

Commitment to resilience: Teams practice recombining existing actions. They have strong procedures and acquire new skills very quickly.

Under-specification of structures: HROs empower every team member to make important decisions related to their expertise.

AI Systems and Human Organizations

The researcher draws some lessons from the many domains where advanced technology has been deployed. AI's history has been peppered with peaks and valleys, and the technology is currently enjoying an exuberant period, as one senior executive noted. As enterprises move to bridge the gap between hype and reality by developing cutting-edge applications, here’s a primer for organizations to dial down the risks associated with AI.

The goal of human organizations should be to create combined human-machine systems that become high-reliability organizations quickly. The researcher says AI systems must continuously monitor their own behavior, the behavior of the human team, and the behavior of the environment to check for anomalies, near misses, and unanticipated side effects of actions.
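To make the idea of continuous self-monitoring concrete, here is a minimal sketch, assuming the monitored behavior can be reduced to a single numeric signal such as a model confidence score. The `AnomalyMonitor` class, its window size, and its threshold are hypothetical choices for illustration and are not taken from the study; a real system would watch many signals and route flagged events to human reviewers.

```python
from collections import deque
import statistics

class AnomalyMonitor:
    """Flags readings that deviate sharply from a rolling window of history."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold           # allowed distance in standard deviations
        self.min_history = min_history       # readings needed before judging

    def observe(self, value):
        """Return True if value looks anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            spread = statistics.pstdev(self.history)
            if spread > 0:
                anomalous = abs(value - mean) / spread > self.threshold
            else:
                anomalous = value != mean
        if not anomalous:
            # Only normal readings update the baseline, so one outlier
            # does not distort later checks.
            self.history.append(value)
        return anomalous

# Hypothetical stream of confidence scores with one sudden drop.
monitor = AnomalyMonitor()
readings = [0.90, 0.91, 0.89, 0.92, 0.90, 0.91, 0.35, 0.90]
flags = [monitor.observe(r) for r in readings]
print(flags)  # only the 0.35 reading is flagged
```

The same pattern could in principle be pointed at signals from the human team or the environment, as the researcher suggests, though choosing those signals is the hard part.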

Organizations should avoid deploying AI technology where human organizations cannot be trusted to achieve high reliability.

AI systems should continuously monitor the functioning of the human organization to check for threats to its high reliability.

In summary, as with previous technological advances, AI technology increases the risk that failures in human organizations and actions will be magnified by the technology with devastating consequences. To avoid such catastrophic failures, the combined human and AI organization must achieve high reliability.