The difference between software architecture and software design is unclear to most people. Even for developers, the line is often blurry, and they may mix up elements of software architecture patterns and design patterns. In this blog, I would like to simplify these concepts and explain the differences between software design and software architecture. In addition, I will show you why it is important for a developer to know about both.

The Definition of Software Architecture

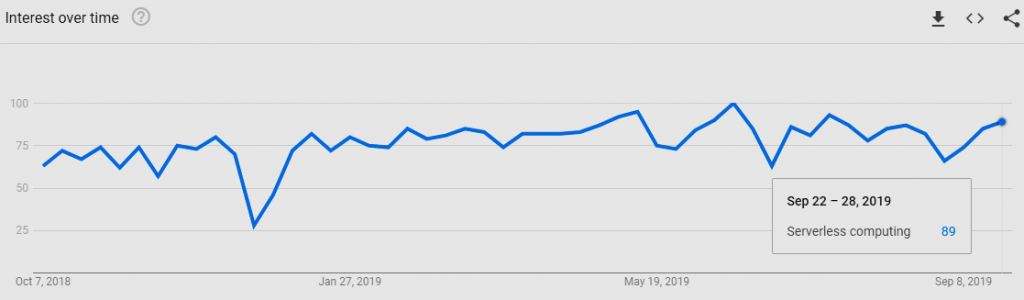

In simple words, software architecture is the process of converting software characteristics such as flexibility, scalability, feasibility, reusability, and security into a structured solution that meets both the technical and the business expectations. This definition leads us to ask which characteristics of a piece of software can affect its architecture. There is a long list of them, and they mainly represent the business requirements, the functional and non-functional (operational) requirements, and the technical requirements.

The Characteristics of Software Architecture

As explained, software characteristics describe the requirements and expectations of a piece of software at the operational and technical levels. Thus, when a product owner says they are competing in a rapidly changing market and must adapt their business model quickly, the software should be “extendable, modular and maintainable”. If the business deals with urgent requests that must be completed successfully within a limited time, then as a software architect you should note that performance, fault tolerance, scalability and reliability are your key characteristics. Finally, if after defining these characteristics the business owner tells you that the project has a limited budget, another characteristic comes up: “feasibility”.

Software Design

While software architecture is responsible for the skeleton and the high-level infrastructure of a piece of software, software design is responsible for the code-level design: what each module does, the scope of each class, the purpose of each function, and so on.

If you are a developer, it is important for you to know what the SOLID principles are and how design patterns solve recurring problems.

Single Responsibility Principle: each class should have one single purpose, that is, one responsibility and therefore only one reason to change.
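As a minimal sketch of the idea, assume a hypothetical invoicing example (the names `Invoice` and `InvoiceFormatter` are illustrative and not taken from any real library). The calculation and the presentation live in separate classes, so a change to the output format does not touch the pricing logic:

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    """Holds invoice data and the one calculation that belongs to it."""
    amount: float
    tax_rate: float

    def total(self) -> float:
        # Changes only when the pricing rules change.
        return self.amount * (1 + self.tax_rate)


class InvoiceFormatter:
    """Changes only when the presentation of an invoice changes."""

    def to_text(self, invoice: Invoice) -> str:
        return f"Total due: {invoice.total():.2f}"


if __name__ == "__main__":
    print(InvoiceFormatter().to_text(Invoice(amount=100.0, tax_rate=0.2)))
```

If both jobs lived in one class, a new report layout and a new tax rule would each force edits to the same code, which is the situation this principle tries to avoid.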

Open Closed Principle: a class should be open for extension but closed for modification. In simple words, you should be able to add more functionality to a class without editing its current functions in a way that breaks the existing code that uses it.
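One common way to get there is to extend behavior through new subclasses rather than by editing existing ones. The sketch below assumes a hypothetical `Discount` hierarchy and `checkout` function, invented purely for illustration:

```python
from abc import ABC, abstractmethod


class Discount(ABC):
    """Extension point: new discount types subclass this."""

    @abstractmethod
    def apply(self, price: float) -> float:
        ...


class NoDiscount(Discount):
    def apply(self, price: float) -> float:
        return price


class PercentageDiscount(Discount):
    """Added later, without modifying checkout() or NoDiscount."""

    def __init__(self, percent: float) -> None:
        self.percent = percent

    def apply(self, price: float) -> float:
        return price * (1 - self.percent / 100)


def checkout(price: float, discount: Discount) -> float:
    # Existing code: stays untouched when new discount types appear.
    return round(discount.apply(price), 2)


if __name__ == "__main__":
    print(checkout(200.0, NoDiscount()))
    print(checkout(200.0, PercentageDiscount(25)))
```

Here `checkout` is closed for modification, while the set of discounts stays open for extension.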

Liskov Substitution Principle: this principle guides the developer to use inheritance in a way that will not break the application logic at any point. Thus, if a child class called “XyClass” inherits from a parent class “AbClass”, the child class must not override the parent's functionality in a way that changes the behavior callers expect from the parent class. You can then use an object of XyClass in place of an object of AbClass without breaking the application logic.
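To make this concrete, here is a small sketch that reuses the names “AbClass” and “XyClass” from the paragraph above. The `total` method and the `report` function are assumptions added for the example. The child adds bookkeeping of its own but keeps the parent's contract, so the caller cannot tell the two apart:

```python
from typing import Sequence


class AbClass:
    def total(self, prices: Sequence[float]) -> float:
        """Contract: return the sum of prices without mutating the input."""
        return sum(prices)


class XyClass(AbClass):
    def __init__(self) -> None:
        self.calls = 0

    def total(self, prices: Sequence[float]) -> float:
        # Extra bookkeeping is fine; the returned value still honors
        # the parent's contract.
        self.calls += 1
        return super().total(prices)


def report(calculator: AbClass, prices: Sequence[float]) -> str:
    # Written against AbClass; works unchanged with XyClass.
    return f"{calculator.total(prices):.2f}"


if __name__ == "__main__":
    print(report(AbClass(), [1.5, 2.5]))
    print(report(XyClass(), [1.5, 2.5]))
```

A violation would be an `XyClass.total` that, for example, returned a string or silently dropped negative prices, because `report` would then behave differently depending on which class it received.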

Interface Segregation Principle: since a class can implement multiple interfaces, structure your code so that a class is never forced to implement a function that is irrelevant to its purpose. In short, keep your interfaces small and grouped by purpose.
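The sketch below illustrates this with two small interfaces instead of one large one. Python has no `interface` keyword, so the example uses `typing.Protocol` as a stand-in, and the device names are hypothetical:

```python
from typing import Protocol


class Printer(Protocol):
    def print_document(self, text: str) -> str: ...


class Scanner(Protocol):
    def scan(self) -> str: ...


class BasicPrinter:
    """Only prints, so it only satisfies the Printer interface."""

    def print_document(self, text: str) -> str:
        return f"printed: {text}"


class OfficeMachine:
    """Satisfies both small interfaces."""

    def print_document(self, text: str) -> str:
        return f"printed: {text}"

    def scan(self) -> str:
        return "scanned page"


def send_to_printer(device: Printer, text: str) -> str:
    # Depends only on the printing capability it actually needs.
    return device.print_document(text)


if __name__ == "__main__":
    print(send_to_printer(BasicPrinter(), "report"))
    print(send_to_printer(OfficeMachine(), "report"))
```

With a single combined interface, `BasicPrinter` would have to provide a `scan` method that does nothing or raises an error, which is exactly the forced implementation the principle warns against.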

Dependency Inversion Principle: if you have ever followed TDD in your application development, then you know how important decoupling your code is for testability and modularity. The principle says that high-level code should depend on abstractions rather than on concrete classes it creates itself. In practice, if a certain class such as “Purchase” depends on a “User” class, then the User object should be instantiated outside the “Purchase” class and passed into it.
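A minimal sketch of the “Purchase” and “User” example follows. The constructor arguments and the `summary` method are assumptions made for illustration. The key point is that `Purchase` receives its user instead of creating one:

```python
class User:
    def __init__(self, name: str) -> None:
        self.name = name


class Purchase:
    def __init__(self, user: User, amount: float) -> None:
        # The user is injected from outside; Purchase never calls User(...).
        self.user = user
        self.amount = amount

    def summary(self) -> str:
        return f"{self.user.name} paid {self.amount:.2f}"


class StubUser(User):
    """Hypothetical test double that can replace a real user in tests."""

    def __init__(self) -> None:
        super().__init__("test-user")


if __name__ == "__main__":
    print(Purchase(User("Ada"), 9.5).summary())
    print(Purchase(StubUser(), 1.0).summary())
```

Because the dependency arrives through the constructor, a test can pass in `StubUser` without touching a database or any other resource a real user object might need.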

Remember that there is a difference between a software architect and a software developer. Software architects are usually experienced team leaders with good knowledge of existing solutions, which helps them make the right decisions in the planning phase. A software developer should know more about software design, and enough about software architecture to make communication within the team easier.